Understanding Microcontroller Performance Analysis Techniques

Contributed By DigiKey

2011-07-08

Benchmarks let you compare processors, but there is still plenty of variability. Understanding and running standard benchmarks can give designers greater insight into and control over their applications.

It has never been easy to compare microprocessors. Even comparing desktop or laptop computers, which typically have processors that are all variants of the same fundamental architecture, can be frustrating, as the machine with the faster numbers may run achingly slowly compared to a "worse" one. Things get even tougher in the embedded world, where the number of processors and configurations is practically infinite.

Benchmarking is the usual solution to this conundrum. For many years, the Dhrystone benchmark (a play on the Whetstone benchmark, which includes the floating-point operations that Dhrystone omits) was the only game in town. However, it has a number of significant issues. Chief among them is that it doesn’t reflect any real-world computation; it simply tries to mimic the statistical frequency of various operations. In addition, compilers can often do much of the computing at compile time, meaning the work doesn’t have to be done when the benchmark is run.

The real test of a benchmark is that, when looking in detail at results (especially ones that initially look strange), you can rationalize why the results look the way they do. An ideal benchmark would provide a score that purely reflected the processor’s performance capabilities, irrespective of the rest of the system. Unfortunately, that’s not possible because no processor acts in isolation: all processors must interact with memory – cache, data memory, and instruction memory, each of which may or may not run at the full processor frequency. In addition, these processors must all run code generated by a compiler, and different compilers generate different code.

Even the same compiler will generate different code depending on the optimization settings chosen when you compile your code. Such differences cannot be avoided, but the main thing to avoid is having the actual benchmark code optimized away.

Even though results might depend on the compiler and memory, you should be able to explain any such results only in terms of the processor itself, the compiler (and settings), and memory speed. That was not the case with the Dhrystone benchmark. However, the more recent CoreMark benchmark from the Embedded Microprocessor Benchmark Consortium (EEMBC) has overcome these deficiencies and proven to be much more successful.

EEMBC developed and publicly released the CoreMark benchmark in 2009 (downloaded now by more than 4,100 users). It has been adapted for numerous platforms, including Android. The developers took specific care to avoid the pitfalls of older benchmarks. By looking at how the CoreMark benchmark works, as well as some example results, we can see that it not only can be a reliable indicator of performance, but it can also help to identify where microcontroller and compiler performance can be improved.

The CoreMark benchmark program

The CoreMark benchmark program uses three basic data structures to represent real-world work. The first structure is the linked list, which exercises pointer operations. The second is the matrix; matrix operations typically involve tight optimized loops. Finally, state machines require branching that is hard to predict and which is much less structured than the loops used for matrix operations.

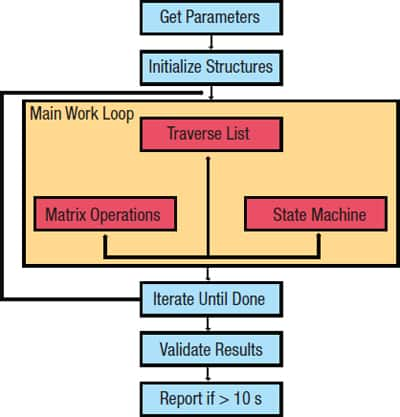

In order to remain accessible to as many embedded systems as possible, big and small, the program has a 2 KB code footprint. Figure 1 illustrates how the program works. The first two steps might seem trivial, but they are actually critically important: they are the steps that ensure the compiler can’t pre-compute any results. The input data to be used are not known until run time.

Figure 1: CoreMark benchmark process.

The bulk of the benchmark workload happens in the main work loop. One of the data structures, a linked list, is scanned. The value of each entry determines whether a matrix operation or a state-machine operation is to be performed. This decision-operation step is repeated until the list is exhausted. At that point, a single iteration is complete. The work loop is repeated until at least 10 seconds have elapsed. The 10-second requirement is imposed to ensure enough data to provide a meaningful result. If it runs for less than this, then the benchmark program will not report a result. However, this time requirement can be modified if the user runs the benchmark on a simulator.
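The work loop described above can be sketched in a few lines. This is only an illustration of the control flow as the article describes it, not EEMBC's source: the names `matrix_op`, `state_machine_op`, `one_iteration`, and `run_benchmark` are hypothetical, a plain Python list stands in for the linked list, and the two operations are trivial stand-ins for the real kernels.

```python
import time

def matrix_op(value):
    # Stand-in for a matrix kernel: a tight, regular loop.
    return sum(i * value for i in range(8)) & 0xFFFF

def state_machine_op(value):
    # Stand-in for a state machine: branching that depends on the data.
    state = 0
    for bit in range(8):
        if (value >> bit) & 1:
            state = (state + 1) % 3
        else:
            state = 0
    return state

def one_iteration(values):
    # Scan the list; the value of each entry selects the operation to run.
    total = 0
    for value in values:
        if value % 2 == 0:
            total += matrix_op(value)
        else:
            total += state_machine_op(value)
    return total & 0xFFFF

def run_benchmark(values, min_seconds=10.0, clock=time.perf_counter):
    # Repeat complete iterations until the minimum run time has elapsed,
    # then report iterations per second of execution time.
    start = clock()
    iterations = 0
    while True:
        one_iteration(values)
        iterations += 1
        elapsed = clock() - start
        if elapsed >= min_seconds:
            return iterations / elapsed

# Short run for demonstration; the article's requirement is 10 seconds.
print(run_benchmark(list(range(16)), min_seconds=0.05))
```

Passing the clock in as a parameter is a design choice made for this sketch: it lets the minimum-time rule be exercised with a fake clock, much as the time requirement can be relaxed on a simulator.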

After the main work is completed, a common cyclic redundancy check (CRC) function acts as a self-check to ensure that nothing went wrong (by accident or otherwise) during execution. Assuming everything checks out, the program reports the CoreMark result. This number represents the number of iterations of the main work loop per second of execution time.
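As a rough illustration of how a CRC self-check catches a corrupted run, the sketch below uses a bitwise CRC-16 with the reflected polynomial 0xA001 (the common CRC-16/ARC variant). The article does not say which CRC variant or which data CoreMark checks, so both the variant and the helper name `self_check` are assumptions made for this example.

```python
def crc16(data: bytes, crc: int = 0) -> int:
    # Bitwise CRC-16/ARC: initial value 0, reflected polynomial 0xA001.
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 1:
                crc = (crc >> 1) ^ 0xA001
            else:
                crc >>= 1
    return crc

def self_check(results: bytes, expected_crc: int) -> bool:
    # Hypothetical helper: the run is valid only if the CRC of its
    # results matches the value expected for these inputs.
    return crc16(results) == expected_crc

good = bytes([10, 20, 30, 40])
expected = crc16(good)
corrupted = bytes([10, 20, 31, 40])      # one flipped bit

print(self_check(good, expected))        # True
print(self_check(corrupted, expected))   # False
```

Because the benchmark is deterministic for a given set of inputs, the expected CRC can be known ahead of time, which is what makes a mismatch meaningful.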

While most benchmark users are honest, it’s always important to ensure that any benchmarking scheme has protections against abuse. There are two primary ways that someone could try to tamper with the results: editing the code (other than within the porting layer), since the code is necessarily provided in source form, and simply faking results. The CRC helps to detect any issues that might arise if the code is corrupted, and certification by the EEMBC Technology Center is the final arbiter. No one is required to have their results certified, but certification adds significant credibility since it confirms that a neutral third party achieved the same numbers.

Adapting the benchmarking runs

While the program looks to user parameters for initializing the data, you do not explicitly provide the raw data. That would be too much work, and it would also open up the possibility of manipulation through careful selection of initialization data. Instead, the program looks for three seed values, which must be set by the user.

These numbers direct the initialization of values in the data structures in a way which is opaque to the user. While they act as "seeds," there is no random element to this. The structures are completely deterministic, and multiple runs of a benchmark with the same seeds will result in identical execution and results.
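The idea of deterministic seeding can be shown with a small sketch. The mixing scheme below (a linear congruential step) and the function name `init_data` are hypothetical and chosen only for illustration; CoreMark's actual initialization is different. The point is that identical seeds always produce identical data.

```python
def init_data(seed1: int, seed2: int, seed3: int, size: int = 8) -> list:
    # Hypothetical scheme: fold the three seeds into one 32-bit state,
    # then derive each value with a fixed arithmetic step. Nothing here
    # is random; the output depends only on the seeds.
    state = (seed1 ^ (seed2 << 8) ^ (seed3 << 16)) & 0xFFFFFFFF
    values = []
    for _ in range(size):
        state = (state * 1103515245 + 12345) & 0xFFFFFFFF
        values.append((state >> 16) & 0xFF)
    return values

# Seed values here are arbitrary examples.
first_run = init_data(0, 0, 0x66)
second_run = init_data(0, 0, 0x66)
print(first_run == second_run)               # True: same seeds, same data
print(first_run == init_data(1, 0, 0x66))    # False: different seeds
```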

You may also need to adapt the benchmark to account for the way in which your system allocates memory. Systems with ample resources can simply use the heap and malloc() calls. This allows each allocation of memory to be made when needed and for exactly the amount of memory needed. That flexibility comes at a cost, however, and better systems need faster ways of using memory.

The fastest approach is to pre-allocate memory entirely, but with linked list operations that is not feasible. An intermediate approach is to create a number of predefined chunks of memory (a memory pool) which can be doled out as needed. The tradeoff is that you cannot select how much memory you get in each chunk; you get a fixed-size block. The porting layer allows you to adapt the benchmark to the type of memory allocation scheme used on the system being evaluated.

Parallelism is another trait that you can exploit if your system supports it. You can build the CoreMark benchmark for parallel operation, specifying the number of contexts (threads or processes) to be spawned during execution. However, you should avoid using CoreMark for representing a processor’s multicore performance since this benchmark will most certainly scale 99.9 percent linearly due to its small size.
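To make the memory-pool tradeoff concrete, here is a minimal sketch of a fixed-size block pool. The class name `BlockPool` and its methods are hypothetical and are not part of the CoreMark porting layer; on a real microcontroller this would be written in C over a static array, but the bookkeeping is the same.

```python
class BlockPool:
    """Fixed-size block allocator backed by one buffer allocated up front."""

    def __init__(self, block_size: int, block_count: int):
        self.block_size = block_size
        # All memory is reserved once, before any work starts.
        self.buffer = bytearray(block_size * block_count)
        self.free_offsets = [i * block_size for i in range(block_count)]

    def alloc(self) -> int:
        # No size argument: every caller gets one block of the same size.
        if not self.free_offsets:
            raise MemoryError("pool exhausted")
        return self.free_offsets.pop()

    def free(self, offset: int) -> None:
        # Returning a block just puts its offset back on the free list.
        self.free_offsets.append(offset)

    def view(self, offset: int) -> memoryview:
        # Writable window onto the block that starts at this offset.
        return memoryview(self.buffer)[offset:offset + self.block_size]

pool = BlockPool(block_size=16, block_count=4)
node = pool.alloc()
pool.view(node)[0] = 0x2A    # use the block, e.g. as a list node
pool.free(node)
```

Allocation and release are constant-time list operations, which is where the speed advantage over a general-purpose heap comes from; the cost is that a request for 3 bytes and a request for 16 bytes both consume a whole block.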

Making sense of the results

Of course, it is what you do with the benchmark results that can lead to confusion (intentional or otherwise). For this reason, EEMBC has imposed strict reporting requirements. The CoreMark website, http://www.coremark.org, has a place for reporting results, and you cannot simply enter a single CoreMark score. There are a few other critical variables that may affect your results.

- The biggest is the compiler used and the options set when compiling the benchmark. You must report that information when submitting results.

- A second major influencer is the means by which memory is allocated – you must report the approach taken if it is something other than heap (malloc).

- A third factor, when relevant, is parallelization. You must report the number of contexts created.

A look at some specific results can help show how the various required reporting elements correlate with different benchmark scores, and why it’s important to identify these elements when reporting your results. All of the numbers that follow come from the public list of results available on the CoreMark website.

The simple impact of compiler version can be seen in the results of Table 1. The Analog Devices processor shows a speedup of about ten percent with a newer compiler version, presumably indicating that the new compiler is doing a better job. The Microchip example shows an even greater difference between two more distant versions of the GNU C compiler (gcc). For the two NXP processors, all of the compilers are subtle variations on the same version, thereby minimizing the differences.

| Processor | Compiler | CoreMark/MHz |
| --- | --- | --- |
| Analog Devices BF536 | gcc 4.1.2 | 1.01 |
| Analog Devices BF536 | gcc 4.3.3 | 1.12 |
| Microchip PIC32MX36F512L | gcc 3.4.4 MPLAB C32 v1.00-20071024 | 1.90 |
| Microchip PIC32MX36F512L | gcc 4.3.2 (Sourcery G++ Lite 4.3-81) | 2.30 |
| NXP LPC1114 | Keil ARMcc v4.0.0.524 | 1.06 |
| NXP LPC1114 | gcc 4.3.3 (Code Red) | 0.98 |
| NXP LPC1768 | ARM CC 4.0 | 1.75 |
| NXP LPC1768 | Keil ARMCC v4.0.0.524 | 1.76 |

Table 1: Impact of different compilers on CoreMark results.

Even when using the same compiler, different settings will, of course, create different results as the compiler tries to optimize the program differently. Table 2 shows some example results.

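| Processor | Compiler | Settings | CoreMark/MHz |
| --- | --- | --- | --- |
| Microchip PIC24JF64GA004 | gcc 4.0.3 dsPIC030, Microchip v3_20 | -Os -mpa | 1.01 |
| Microchip PIC24JF64GA004 | gcc 4.0.3 dsPIC030, Microchip v3_20 | -O3 | 1.12 |
| Microchip PIC24HJ128GP202 | gcc 4.0.3 dsPIC030, Microchip v3.12 | -O3 | 1.86 |
| Microchip PIC24HJ128GP202 | gcc 4.0.3 dsPIC030, Microchip v3.12 | -mpa | 1.29 |
| Microchip PIC32MX36F512L | gcc 4.4.4 MPLAB C32 v1.00-20071024 | -O2 | 1.71 |
| Microchip PIC32MX36F512L | gcc 4.4.4 MPLAB C32 v1.00-20071024 | -O3 | 1.90 |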

Table 2: Changing compiler settings yields different CoreMark results.

In the first example (PIC24JF64GA004), optimizing for smaller code size comes at a cost of reduced benchmark performance of about 10 percent. The difference is even more dramatic in the second case, running 30 percent slower when the procedural abstraction optimization flag (-mpa) is set. The difference between the compiler settings on the last processor also reflects differences in the amount of optimization, with approximately a 10 percent benefit from the greater speed optimizations provided by the -O3 setting.
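The percentages quoted above follow directly from the CoreMark/MHz values in Table 2. A short sketch of the arithmetic, with the helper name `percent_change` chosen for this example:

```python
def percent_change(baseline: float, value: float) -> float:
    # Relative change of value with respect to baseline, in percent.
    return (value - baseline) / baseline * 100.0

# (processor, baseline setting, baseline score, other setting, other score)
# Scores are the CoreMark/MHz values from Table 2.
comparisons = [
    ("PIC24JF64GA004", "-O3", 1.12, "-Os -mpa", 1.01),
    ("PIC24HJ128GP202", "-O3", 1.86, "-mpa", 1.29),
    ("PIC32MX36F512L", "-O2", 1.71, "-O3", 1.90),
]

for name, base_flag, base, other_flag, other in comparisons:
    change = percent_change(base, other)
    print(f"{name}: {other_flag} vs {base_flag}: {change:+.1f}%")
```

The three results come out at roughly -9.8, -30.6, and +11.1 percent, matching the rounded figures in the text.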

The effects of memory can be seen in Table 3. In the first case, wait states are introduced when the clock frequency exceeds what the flash can handle, reducing the CoreMark/MHz number. Similarly, in the second case, the DRAM can’t keep up with the processor above 50 MHz, so the clock frequency ratio between the memory and processor goes down to 1:2, reducing the CoreMark/MHz number.

| Processor | Clock Speed (MHz) | Memory Notes | CoreMark/MHz | CoreMark |
| --- | --- | --- | --- | --- |
| TI OMAP 3530 | 500 | Code in FLASH | 2.42 | 1210 |
| TI OMAP 3530 | 600 | Code in FLASH | 2.19 | 1314 |
| TI Stellaris LM3S9B96 Cortex M3 | 50 | 1:1 Memory/CPU clock not possible beyond 50 MHz | 1.92 | 96 |
| TI Stellaris LM3S9B96 Cortex M3 | 80 | 1:1 Memory/CPU clock not possible beyond 50 MHz | 1.60 | 128 |

Table 3: Effect of memory settings on CoreMark results.

However, in both of these cases, the increase in clock frequency was greater than the decrease in operational efficiency, so the raw CoreMark number still went up with the increase in clock frequency; it just didn’t increase as much as the frequency did.
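The relationship behind this observation is simply that the raw CoreMark score equals CoreMark/MHz multiplied by the clock frequency in MHz. The sketch below reproduces the raw scores from the memory table and shows how a clock gain and an efficiency loss combine; the function names are chosen for this example.

```python
# (processor, clock in MHz, CoreMark/MHz) taken from the memory table.
rows = [
    ("TI OMAP 3530", 500, 2.42),
    ("TI OMAP 3530", 600, 2.19),
    ("TI Stellaris LM3S9B96", 50, 1.92),
    ("TI Stellaris LM3S9B96", 80, 1.60),
]

def raw_coremark(clock_mhz: float, per_mhz: float) -> float:
    # Raw score = efficiency (CoreMark/MHz) x clock frequency (MHz).
    return clock_mhz * per_mhz

def net_gain(slow, fast):
    # Returns (clock ratio, efficiency ratio, raw score ratio)
    # for two (name, clock, CoreMark/MHz) rows of the same processor.
    clock_ratio = fast[1] / slow[1]
    efficiency_ratio = fast[2] / slow[2]
    return clock_ratio, efficiency_ratio, clock_ratio * efficiency_ratio

for name, mhz, per_mhz in rows:
    print(f"{name} @ {mhz} MHz: CoreMark {raw_coremark(mhz, per_mhz):.0f}")

for slow, fast in [(rows[0], rows[1]), (rows[2], rows[3])]:
    clock, eff, net = net_gain(slow, fast)
    print(f"{slow[0]}: clock x{clock:.2f}, efficiency x{eff:.3f}, raw score x{net:.3f}")
```

For the OMAP 3530, a 20 percent faster clock combined with about 9.5 percent lower efficiency still yields a raw score about 8.6 percent higher. For the Stellaris part, a 60 percent faster clock with about 16.7 percent lower efficiency yields a raw score about 33 percent higher.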

Finally, the impact of cache size can be seen in Table 4. Here, the code fits in the 2 KB cache of the first configuration, but it fills the cache completely. None of the function parameters on the stack can fit, and so there will be some cache misses. In the second case, the cache has twice the capacity, meaning that it doesn’t suffer the same cache misses as in the first example, giving it a better score.

Table 4: Effect of changing the cache size on CoreMark results.

Note that the second case has a five-stage pipeline, compared to the three-stage pipeline of the first case. A longer pipeline should cause performance to go down due to the extensive branching of the state machine example. A longer pipeline takes longer to refill when a branch is mis-predicted. So the higher CoreMark score indicates that the larger cache more than made up for this degradation.
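The tradeoff between pipeline depth and cache size can be illustrated with a first-order stall model. Every number below is hypothetical and chosen only to show the shape of the argument; none of them are measurements of the processors discussed here, and the function name is likewise an assumption.

```python
def cycles_per_instruction(base_cpi, branch_fraction, mispredict_rate,
                           refill_cycles, mem_fraction, miss_rate,
                           miss_penalty):
    # First-order model: ideal CPI plus average stall cycles per
    # instruction from branch mispredictions and from cache misses.
    branch_stalls = branch_fraction * mispredict_rate * refill_cycles
    cache_stalls = mem_fraction * miss_rate * miss_penalty
    return base_cpi + branch_stalls + cache_stalls

# Hypothetical inputs: 20% branches, 10% of them mispredicted,
# 30% memory accesses, 10-cycle miss penalty.
short_pipe_small_cache = cycles_per_instruction(
    1.0, 0.2, 0.1, refill_cycles=2, mem_fraction=0.3,
    miss_rate=0.10, miss_penalty=10)
long_pipe_large_cache = cycles_per_instruction(
    1.0, 0.2, 0.1, refill_cycles=4, mem_fraction=0.3,
    miss_rate=0.02, miss_penalty=10)

print(f"short pipeline, small cache: {short_pipe_small_cache:.2f} cycles/instruction")
print(f"long pipeline, large cache:  {long_pipe_large_cache:.2f} cycles/instruction")
```

With these assumed inputs, doubling the refill cost adds fewer stall cycles than the lower miss rate removes, so the longer pipeline with the larger cache still needs fewer cycles per instruction. With the miss rate held equal, the longer pipeline would come out slower, which is the degradation the text refers to.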

The scores in all of these examples demonstrate two facts. First, a single number (in this case, CoreMark/MHz) can accurately represent the performance of the underlying microcontroller architecture; the latter examples bear that out clearly. Second, context matters. The compiler can influence how well the code it generates performs. This should be almost obvious: people spend a lot of time developing and refining compilers to improve the results they create, but "good" results depend on whether your goal is fast code or small code. Faster code will run faster; smaller code will not (except in those cases where it actually helps optimize the memory or cache utilization).

The most important thing that these examples show, however, is that the differences between results have rational explanations. They’re not caused by some artifact of the benchmarking code that might favor one microcontroller architecture over another, or by the outright dropping of code by the compiler. In that sense, the CoreMark benchmark is fair and balanced, giving a true reflection of the compiler and architecture, and only of the compiler and architecture.

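Disclaimer: The opinions, beliefs, and viewpoints expressed by the various authors and/or forum participants on this website do not necessarily reflect the opinions, beliefs, and viewpoints of DigiKey or official policies of DigiKey.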