Machine Vision Applications Draw Intelligence from Deep Learning Neural Networks

Contributed By DigiKey's European Editors

2016-10-20

Artificial intelligence (AI) has long been a subject for science fiction writers and academics. The challenge of replicating the complexities of the human brain in a computer has spawned a new generation of scientists, mathematicians and computer algorithm developers. Continued research has now given way to practical AI applications, more frequently described as deep learning or machine learning, that are increasingly becoming part of our world. While the basic concepts have been around for a long time, their commercial potential had never been fully realized. In recent years, the rate at which data is generated has rocketed, and developers have had to think long and hard about how to write algorithms that extract valuable information and statistics from it. Another critical factor has been the availability of highly scalable computing resources, something the cloud now readily provides. For example, the smartphone in your pocket might use Google Now (‘OK Google’) or Apple’s Siri voice command applications, which use deep learning algorithms called (artificial) neural networks to enable speech recognition and learning capabilities. However, beyond the fun and convenience of talking to your phone, a whole host of industrial, automotive and commercial applications are now benefiting from the power of deep learning neural networks.

The convolutional neural network

Before taking a look at some of those applications, let’s investigate how a neural network works and what resources it needs. When we talk generally about neural networks we should more accurately describe them as artificial neural networks. Implemented as software algorithms, they are modeled on the biological neural networks (the central nervous system) of humans and animals. Several types of neural network architecture have been conceived over the years, of which the convolutional neural network (CNN) has been one of the most widely adopted. One key reason is that the CNN architecture is well suited to the parallelization techniques offered by hardware accelerators based around GPU and FPGA devices. Another reason for the popularity of CNNs is that they suit data with a lot of spatial continuity, which makes image processing applications a perfect fit. Spatial continuity refers to pixels in the vicinity of a specific location sharing similar intensity and color attributes. CNNs are built from different layers, each having a specific purpose, and they operate in two distinct phases. The first is an instruction, or training, phase that allows the processing algorithm to understand what data it has and the relationship, or context, between each piece of data. The CNN is created as a learning framework from the structured and unstructured data, with computer-created neurons forming the network of connections and breaks. Pattern matching is a key concept behind a CNN and is used extensively in machine learning.

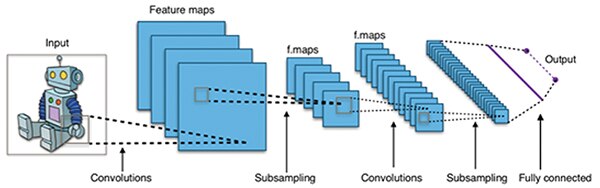

Figure 1: Layer approach of a CNN (Source: Wikipedia, credit Aphex34 [1]).

More insight into how a CNN works can be found in a paper from Cadence [1a], together with a helpful video [1b] recorded at a recent IEEE Society conference on New Frontiers in Computing. The case for using CNNs in image processing applications gained further weight when a Microsoft research team [1c] won the ImageNet computer vision challenge.

The second phase of a CNN is execution. The network is composed of layers of nodes, and the propositions as to what the image is likely to be become increasingly abstract from one layer to the next. A convolution layer extracts low-level features, such as lines or edges, from the source image. Other layers, called pooling layers, reduce variations by averaging or ‘pooling’ common features over a particular region of an image. The result can then be passed on to further convolution and pooling layers. Adding CNN layers tends to improve the accuracy of image recognition, although it also increases the demand on system performance. The layers can operate in parallel if sufficient memory bandwidth is available; the most compute-intensive part of the CNN is the convolution layers.
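The roles of the convolution and pooling layers can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the 6x6 image, the hand-picked vertical edge kernel and the function names `convolve2d` and `average_pool` are all hypothetical, and a trained CNN would learn its kernel values rather than have them written by hand.

```python
# Minimal pure-Python sketch of one convolution layer followed by one
# average-pooling layer. All names and values are illustrative assumptions.

def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1).

    The kernel is applied without flipping, which is how CNN
    'convolution' layers are conventionally computed.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def average_pool(feature_map, size=2):
    """Average each non-overlapping size x size region into one value."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            sum(feature_map[r * size + i][c * size + j]
                for i in range(size) for j in range(size)) / (size * size)
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# Hypothetical 6x6 grayscale image: dark on the left, bright on the right.
image = [[0, 0, 0, 0, 9, 9] for _ in range(6)]

# Hand-picked kernel that responds to dark-to-bright vertical edges.
vertical_edge = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]

features = convolve2d(image, vertical_edge)  # 4x4 feature map
pooled = average_pool(features)              # 2x2 summary of the feature map

print(features[0])  # [0, 0, 27, 27]
print(pooled)       # [[0.0, 27.0], [0.0, 27.0]]
```

The feature map is non-zero only where the window straddles the dark-to-bright boundary, and the pooled output keeps that information at a quarter of the size, which is the data reduction that later layers benefit from.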

The challenge for developers is how to provision enough compute resource to run the CNN and identify the required number of image classifications within the time constraints of an application. For example, an industrial automation application might use computer vision to identify which next stage a part needs to be routed to on a conveyor belt. Pausing the process until the neural network identifies the part would disrupt the flow and slow down production.
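A rough feel for this provisioning question can be had from a back-of-envelope estimate. The sketch below counts multiply-accumulate (MAC) operations for the convolution layers only and compares the resulting compute time with a per-part time budget. Every number in it is an assumption chosen for illustration: the three layer shapes, the accelerator throughput of 50 billion MACs per second and the conveyor rate of 20 parts per second do not come from any real device or production line, and the estimate ignores memory transfers, pooling and classification layers.

```python
# Back-of-envelope latency check for the convolution layers of a CNN.
# All figures below are hypothetical and for illustration only.

def conv_layer_macs(out_h, out_w, in_channels, out_channels, kernel_size):
    """Multiply-accumulate operations needed by one convolution layer."""
    return out_h * out_w * out_channels * kernel_size * kernel_size * in_channels

# Hypothetical three-layer network (output size, channels, square kernel).
hypothetical_layers = [
    {"out_h": 112, "out_w": 112, "in_channels": 3, "out_channels": 32, "kernel_size": 3},
    {"out_h": 56, "out_w": 56, "in_channels": 32, "out_channels": 64, "kernel_size": 3},
    {"out_h": 28, "out_w": 28, "in_channels": 64, "out_channels": 128, "kernel_size": 3},
]

total_macs = sum(conv_layer_macs(**layer) for layer in hypothetical_layers)

ACCELERATOR_MACS_PER_SECOND = 50e9  # assumed sustained throughput
PARTS_PER_SECOND = 20               # assumed conveyor rate

time_budget_s = 1.0 / PARTS_PER_SECOND
inference_time_s = total_macs / ACCELERATOR_MACS_PER_SECOND
meets_deadline = inference_time_s <= time_budget_s

print(f"total MACs: {total_macs:,}")
print(f"estimated compute time: {inference_time_s * 1e3:.2f} ms")
print(f"time budget per part: {time_budget_s * 1e3:.0f} ms")
print(f"meets deadline: {meets_deadline}")
```

In practice sustained throughput is usually well below a device's peak figure, so an estimate like this is best treated as a lower bound on latency rather than a prediction.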

Implementing the CNN

Accelerating parts of the CNN’s ongoing ‘reinforcement’ learning and execution phases will significantly speed up this largely mathematical task. With their high memory bandwidth and compute capabilities, GPUs and FPGAs are potential candidates for the job. Conventional microprocessors, with the cache bottlenecks exhibited by any Von Neumann architecture, can run the algorithm but hand off layer abstraction tasks to hardware accelerators. While GPUs and FPGAs both offer significant parallel processing capabilities, the fixed nature of a GPU’s architecture means that an FPGA, with its flexible and dynamically reconfigurable architecture, is more suitable for the task of CNN acceleration. With a very fine-grained approach that essentially implements CNN algorithms in hardware, the FPGA’s architecture helps keep latencies to an absolute minimum and makes them a lot more deterministic than the software-derived algorithm approach of a GPU. Another advantage of an FPGA is the ability to “harden” functional blocks, such as a DSP, within the device’s fabric; this approach further strengthens the deterministic nature of the network. In terms of resource usage the FPGA is very efficient, so each CNN layer can be constructed within the FPGA fabric with its own memory close to it.

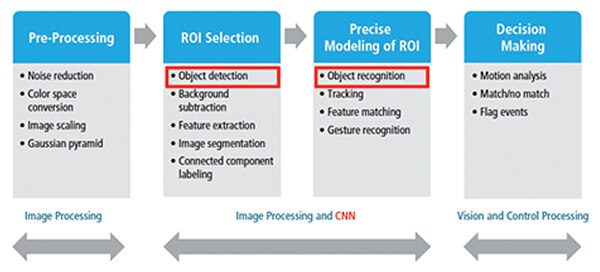

Figure 2: Overview of image processing CNN (Source: Cadence [2]).

Figure 2 highlights the key component blocks of a CNN designed for an industrial image processing application; the two middle blocks represent the core of the CNN.

Development of FPGA-based CNN acceleration applications is complemented by the use of the OpenCL [2a] parallel programming extensions to the C language. An example of an FPGA device suitable for use in convolutional neural networks is the Arria 10 series of devices from Intel’s Programmable Solutions Group (PSG), formerly known as Altera.

To assist developers embarking on an FPGA-based CNN acceleration project, Intel PSG provides a CNN reference design. This uses OpenCL kernels to implement each CNN layer. Data is passed from one layer to the next using channels and pipes, a mechanism that allows data to pass between OpenCL kernels without consuming external memory bandwidth. The convolution layers are implemented using DSP blocks and logic in the FPGA. Hardened blocks include floating point functions that further increase device throughput without impacting available memory bandwidth.
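The idea of streaming data from one layer to the next without parking it in external memory can be illustrated, by analogy only, with chained Python generators. This is not OpenCL code and it is not taken from the Intel PSG reference design; the stage names `source`, `scale_stage`, `relu_stage` and `sink` are hypothetical stand-ins for per-layer kernels, and the per-row operations are deliberately trivial.

```python
# Analogy only: chained generators standing in for per-layer kernels that
# exchange data through channels/pipes. Stage names are hypothetical.

def source(frame):
    """Emit one row of the input frame at a time."""
    for row in frame:
        yield row

def scale_stage(rows, gain):
    """Stand-in for one layer: consume a row, emit a transformed row."""
    for row in rows:
        yield [gain * value for value in row]

def relu_stage(rows):
    """Stand-in for a following layer: clamp negative values to zero."""
    for row in rows:
        yield [max(0, value) for value in row]

def sink(rows):
    """Collect the final output rows."""
    return list(rows)

frame = [[-1, 2], [3, -4]]

# Each row travels through every stage before the next row is read, so no
# stage ever stores a full intermediate frame.
result = sink(relu_stage(scale_stage(source(frame), gain=2)))

print(result)  # [[0, 4], [6, 0]]
```

The point of the analogy is the data flow: each stage hands its output directly to the next, in the way channels and pipes let one kernel feed another on the device instead of writing intermediate results to external memory and reading them back.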

The block diagram illustrated in Figure 3 highlights an example image processing and classification system that could use FPGAs as the acceleration processing units.

Figure 3: Block diagram of image classification system that uses an FPGA for acceleration (Source: Deep Learning on FPGAs, Past, Present and Future [3]).

Intel PSG also hosts a number of useful documents and videos on its website [4] covering the use of FPGA-based convolutional neural networks in industrial, medical and automotive applications. Selecting the framework for a deep learning application is one of the key first steps. To assist the developer there are a growing number of tools, such as OpenANN (openann.github.io), an open source library for artificial neural networks, together with informative community sites such as deeplearning.net and embedded-vision.com. Popular deep learning frameworks that include support for OpenCL include Caffe (caffe.berkeleyvision.org), which is based around C++, and Torch, which is based around Lua. DeepCL is another framework that fully supports OpenCL, although it has not yet gained the user base of the first two.

Conclusion

Industrial markets are keen to exploit the features that deep learning neural networks can bring to a host of manufacturing and automation control applications. This focus has gained further momentum thanks to initiatives such as Industry 4.0 in Germany, along with the broader-based concept of the Industrial Internet of Things. Coupled with a high-quality vision camera, a CPU and FPGA solution and associated controls, a convolutional neural network can be used, for example, to automate a host of manufacturing process checks, improving product quality, reliability and factory safety.

With their dynamically configurable logic fabric, high memory bandwidth and power efficiency, FPGAs are well suited to accelerating the convolution and pooling layers of a CNN. Supported by a host of community-driven open source frameworks and parallel programming standards such as OpenCL, the future use of FPGAs for such applications looks secure. FPGAs present a highly scalable and flexible solution to meet the differing application needs of many industrial sectors.

References:

- [1] Figure 1 – Source: Wikipedia, by Aphex34, own work, CC BY-SA 4.0.

- [1a] Using Convolutional Neural Networks for Image Recognition, by Samer Hijazi, Rishi Kumar, and Chris Rowen, IP Group, Cadence

- [1b] The Computing Earthquake: Neural Networks, Cognitive Layering,

- [1c] Microsoft researchers win ImageNet computer vision challenge

- [2] Figure 2 – Using Convolutional Neural Networks for Image Recognition

- [2a] The open standard for parallel programming of heterogeneous systems - https://www.khronos.org/opencl/

- [3] Figure 3 – Deep Learning on FPGAs, Past, Present and Future - https://arxiv.org/abs/1602.04283

- [4] Machine Learning

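Disclaimer: The opinions, beliefs, and viewpoints expressed by the various authors and/or forum participants on this website do not necessarily reflect the opinions, beliefs, and viewpoints of DigiKey or official policies of DigiKey.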